Introduction

🔥 [ComfyUI Special Workflow | AI Dabaichui Dance Generator]

Built on the Wan2.1 image-to-video model and a custom-trained LoRA, this ComfyUI workflow generates the TikTok hit "Dabaichui Dance". Upload a character image and the AI automatically works out the motion logic, then quickly outputs a high-precision 3D dance video that matches the platform's current trend.

✅ Precisely targeted, nothing redundant:

1️⃣ Specialized function: the LoRA is trained in depth on the Dabaichui dance move library, so the generated results focus entirely on this style;

2️⃣ Minimal process: node-based operation in ComfyUI with no parameter debugging required; drag and drop an image to generate.

🚀 No trial and error, go straight to the trend:

This "Dabaichui assembly line" is designed for content creators: it saves time on choreography and gets you to traffic one step faster!

https://civitai.com/models/1365589/phut-hon-dance?modelVersionId=1542806

Wan-Video

Wan2.1 is a comprehensive and open suite of video foundation models that pushes the boundaries of video generation. It offers these key features:

- 👍 SOTA Performance: Wan2.1 consistently outperforms existing open-source models and state-of-the-art commercial solutions across multiple benchmarks.

- 👍 Supports Consumer-grade GPUs: The T2V-1.3B model requires only 8.19 GB VRAM, making it compatible with almost all consumer-grade GPUs. It can generate a 5-second 480P video on an RTX 4090 in about 4 minutes (without optimization techniques like quantization). Its performance is even comparable to some closed-source models.

- 👍 Multiple Tasks: Wan2.1 excels in Text-to-Video, Image-to-Video, Video Editing, Text-to-Image, and Video-to-Audio, advancing the field of video generation.

- 👍 Visual Text Generation: Wan2.1 is the first video model capable of generating both Chinese and English text, featuring robust text generation that enhances its practical applications.

- 👍 Powerful Video VAE: Wan-VAE delivers exceptional efficiency and performance, encoding and decoding 1080P videos of any length while preserving temporal information, making it an ideal foundation for video and image generation.

https://github.com/Wan-Video/Wan2.1

https://huggingface.co/Kijai/WanVideo_comfy

Recommended machine: Ultra-PRO

Workflow Overview

[Image: workflow overview]

How to use this workflow

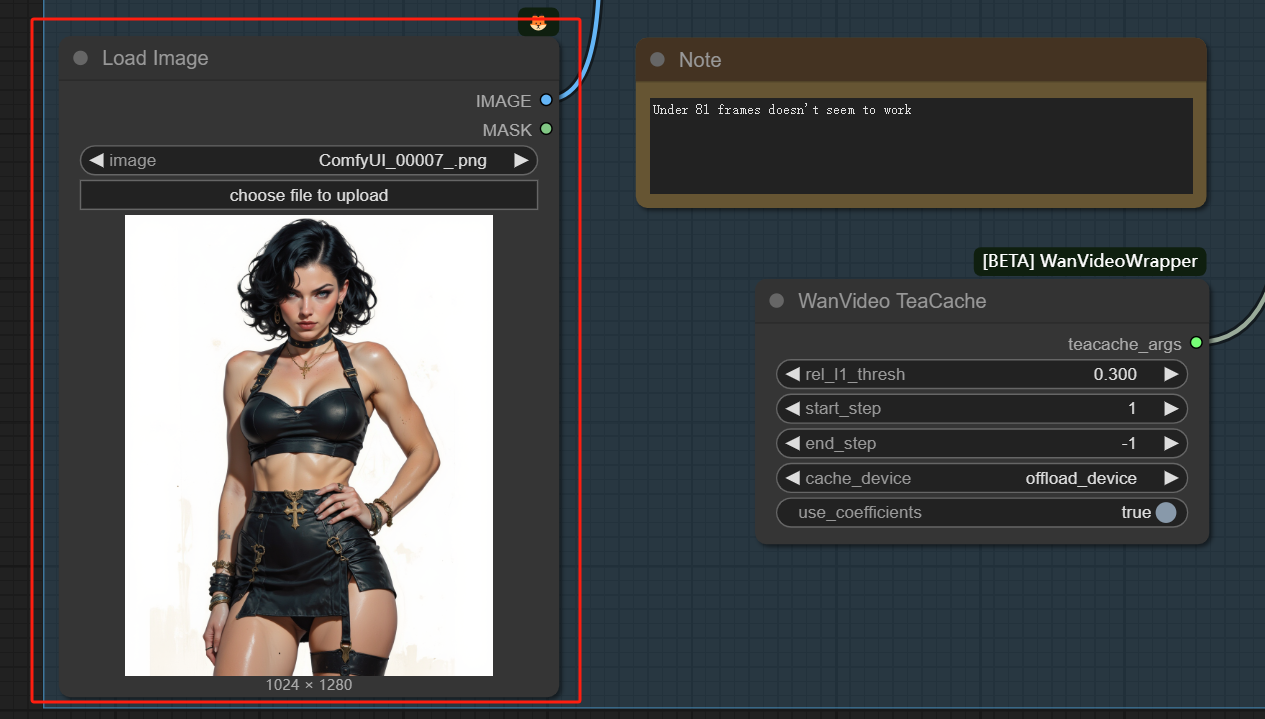

Step 1: Load Image

Step 2: Adjust Video parameters

Setting fewer than 81 frames does not seem to work.

Step 3: Adjust LoRA weights

Step 4: Input the Prompt

There is no need to describe the entire picture in detail; just enter the key information, such as the camera shot and the action.

LoRA trigger word: dabaichui
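
As a rough illustration of the prompt advice above, the sketch below joins the trigger word with a short shot description and a short action description. The helper `build_prompt` and the choice to put the trigger word first are assumptions made for this example, not part of the workflow itself.

```python
# Hypothetical helper: not part of ComfyUI or the workflow, just an illustration.
TRIGGER_WORD = "dabaichui"  # LoRA trigger word given in this guide


def build_prompt(shot: str, action: str, trigger: str = TRIGGER_WORD) -> str:
    """Join the trigger word with brief shot and action descriptions.

    Empty parts are skipped so the prompt never contains stray commas.
    Placing the trigger word first is an assumption, not a requirement.
    """
    parts = [trigger, shot.strip(), action.strip()]
    return ", ".join(p for p in parts if p)


if __name__ == "__main__":
    print(build_prompt("full-body shot", "a girl dancing"))
```

The resulting string can be pasted into the prompt node as-is; keep the descriptions short, as recommended above.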

Step 5: Set the number of sampler steps

In my tests, 30 steps gave very good results for two-dimensional (2D) characters, but real people came out with poor faces; at 50 steps, the facial texture of real people gradually became clear. If the result is not what you expected, try generating again.

Step 6: Get Video

You can change the video length by setting frame_rate or num_frames (in WanVideo Empty Embeds). Video length (in seconds) = num_frames / frame_rate.
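
The length formula can be checked with a few lines of Python. This is a minimal sketch: the function names are hypothetical, the frame rate of 16 in the example is an assumed value rather than a setting taken from the workflow, and the 81-frame floor only reflects the note in Step 2 that fewer frames do not seem to work.

```python
import math

# Floor taken from the note in Step 2; treated here as a soft rule of thumb.
MIN_FRAMES = 81


def video_length_seconds(num_frames: int, frame_rate: float) -> float:
    """Video length = num_frames / frame_rate."""
    if frame_rate <= 0:
        raise ValueError("frame_rate must be positive")
    return num_frames / frame_rate


def frames_for_length(seconds: float, frame_rate: float) -> int:
    """Smallest frame count that reaches the target length, never below MIN_FRAMES."""
    if frame_rate <= 0:
        raise ValueError("frame_rate must be positive")
    return max(MIN_FRAMES, math.ceil(seconds * frame_rate))


if __name__ == "__main__":
    # Assumed example values: 81 frames at 16 frames per second.
    print(video_length_seconds(81, 16))
    # Frames needed for a 6-second clip at the same assumed frame rate.
    print(frames_for_length(6, 16))
```

With these assumed values, 81 frames at 16 frames per second gives a clip of just over 5 seconds, and a 6-second clip needs 96 frames.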